What Is AI Chatbot Security and Why Is It Important?

Chatbots have become important in many aspects of our work and daily life. These software applications are widely used across the apps and websites of organizations in every sector. From finance websites to retail apps, AI chatbots help users get the information they need.

In addition, advanced generative AI chatbots like ChatGPT and Gemini have been changing many business functions, such as generating code and marketing content faster.

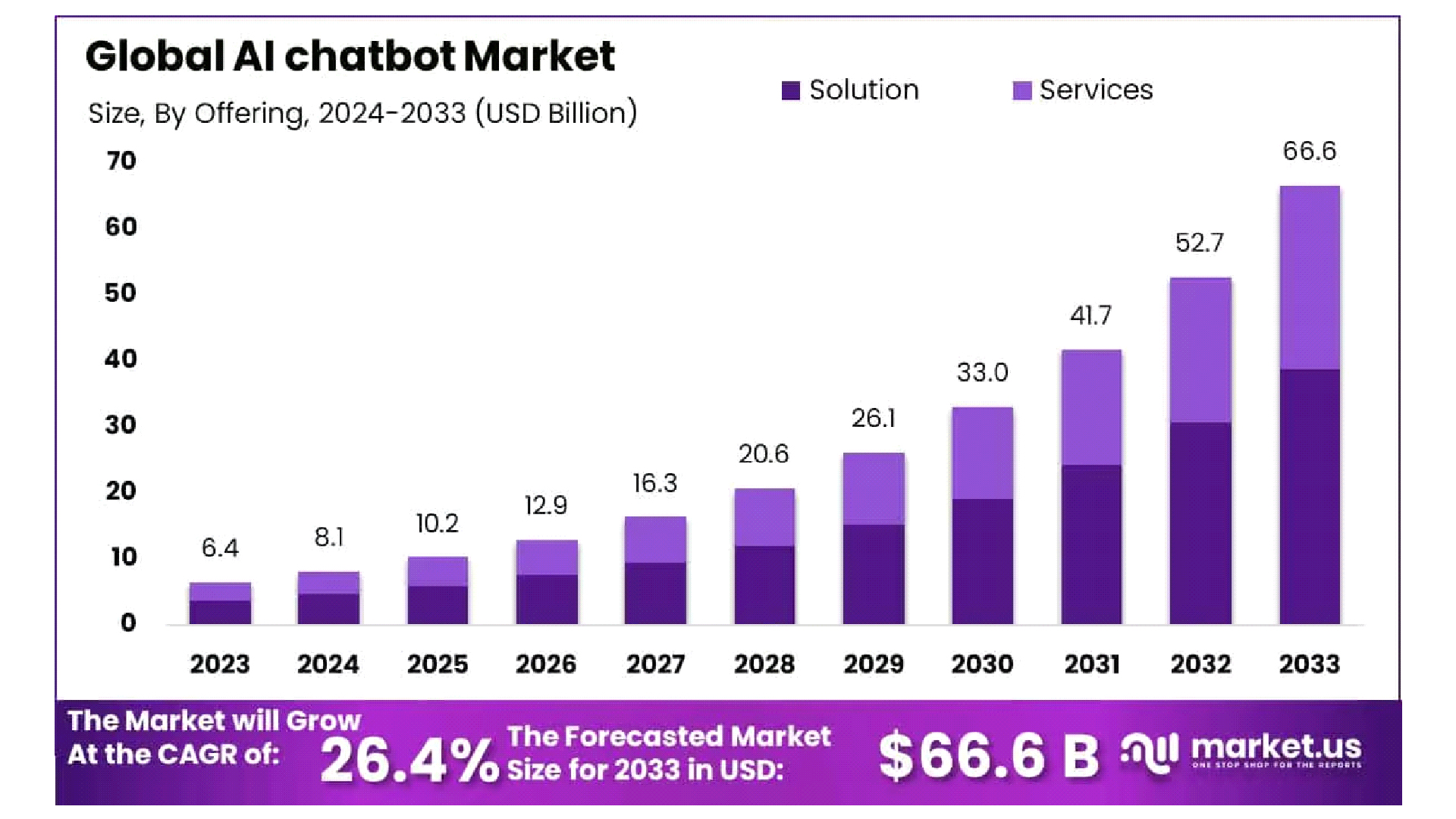

Their widespread adoption and broad range of applications have boosted the global AI chatbot market, which is expected to reach $20.6 billion by 2028 (Source: market.us).

As their popularity grows and attracts a huge user base, chatbots have also become prime targets for cyber attackers. This has increased the importance of AI chatbot security. Even when chatbots are properly trained, tested, and deployed, vulnerabilities remain and raise concerns about the security of both the tool and its users.

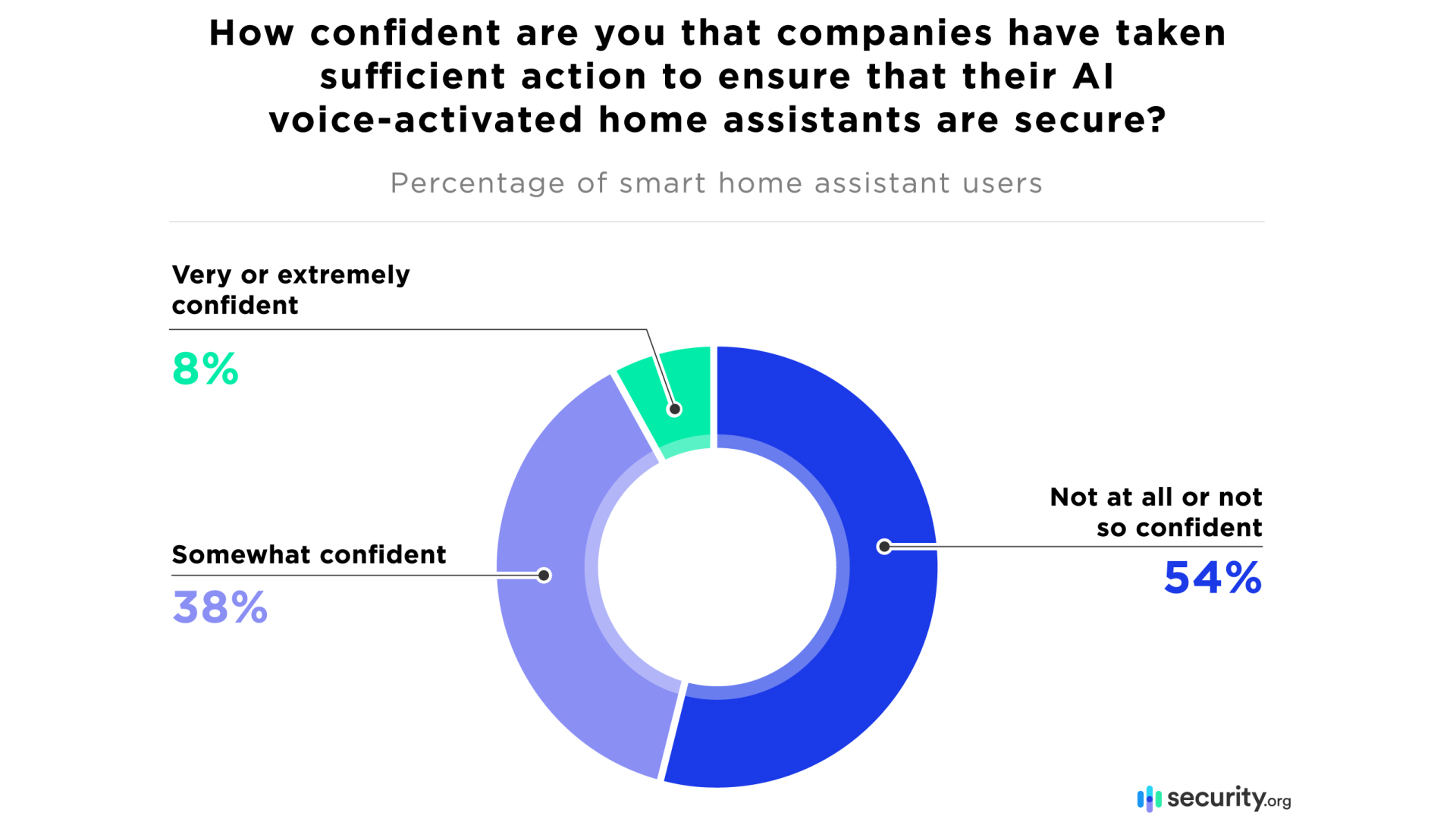

For example, many of us use voice assistant apps and devices such as Alexa, Google Home, and Siri. And here’s how users feel about their security:

Let us understand how organizations can leverage AI chatbots without compromising security.

Are AI Chatbots Secure?

Whenever we interact with AI chatbots on native platforms or websites, we often share personal and confidential information. These chatbots are connected to both the organization's systems and the internet, which makes them powerful but also creates a point of vulnerability and makes them susceptible to security breaches.

Several studies and experiments have found that AI chatbots can be targeted by attacks like prompt injection and misused for other malicious purposes. Thus, chatbot security is now one of the most important security considerations for businesses.

AI chatbot security simply refers to the practices and methods that can help protect chatbots and users from a variety of cybersecurity threats and vulnerabilities. Implementing correct cybersecurity measures for AI chatbots ensures they are protected from unauthorized access, data breaches, and being used to generate phishing content.

A recent example is the DeepSeek cyberattack, which forced the Chinese AI company to stop new user registrations. DeepSeek, known for its popular AI assistant, suffered a significant data breach and a DDoS attack. Cybersecurity firm Wiz discovered an unsecured, publicly accessible database containing over a million lines of sensitive DeepSeek data, including chat logs, API secrets, and operational details. While DeepSeek promptly secured the database after being notified, the exposure raises concerns about potential prior access by malicious actors. The incident highlights the security risks faced by rapidly growing AI companies and the sensitivity of the data they handle.

Concerning Chatbot Security Risks for Enterprises

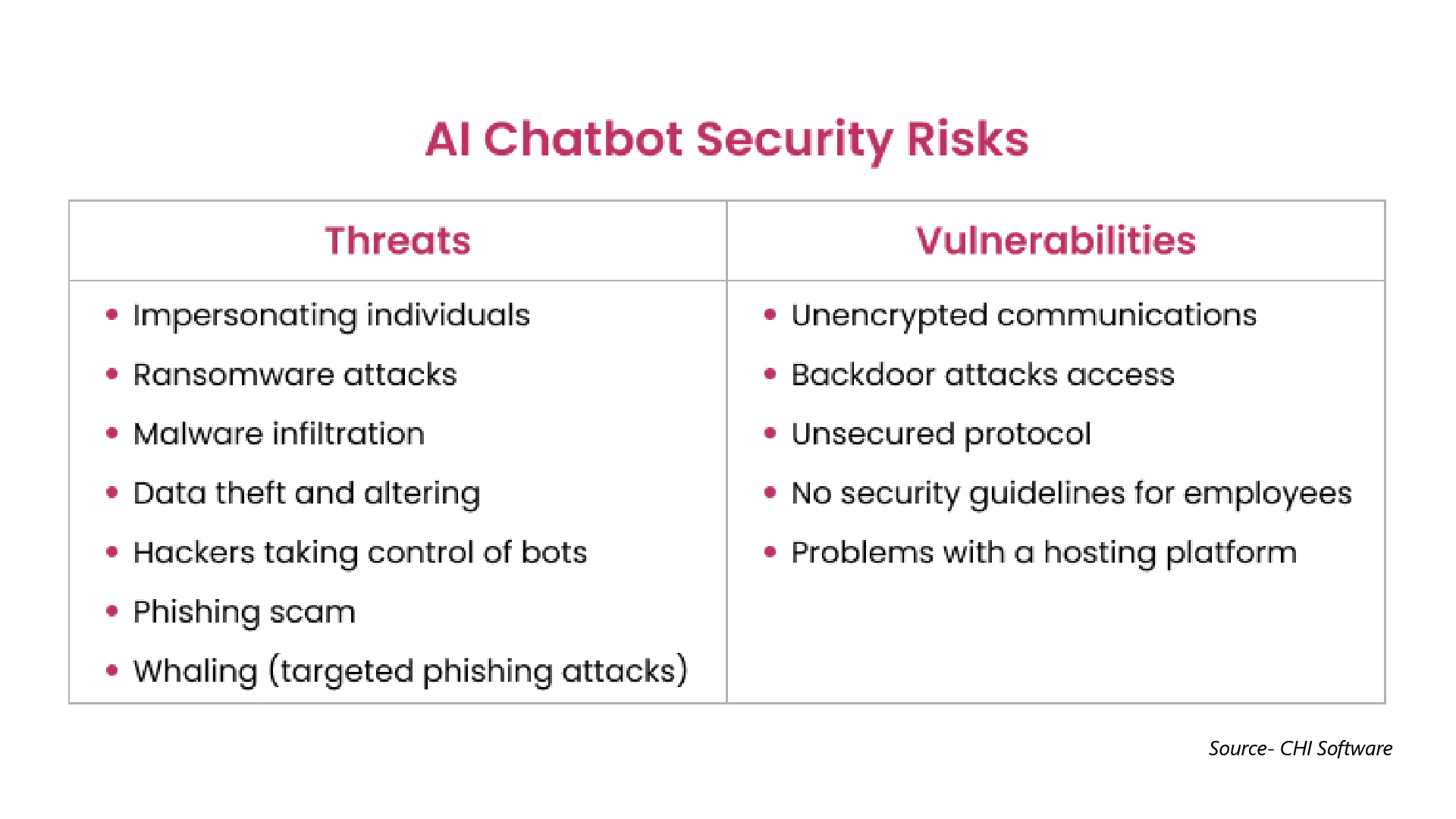

There are basically two types of security challenges for AI chatbots

Let’s discuss some of these in detail.

- Data Leakage

AI chatbots can collect users’ sensitive information such as personally identifiable information (PII), financial information, or health records.

It is therefore important to protect this data; otherwise, it can be exposed through programming or configuration errors or through malicious attacks. Such exposure can lead to identity theft and unauthorized transactions that cause serious damage.
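One common way to limit exposure is to redact obvious PII before chat text is logged or stored. The snippet below is a minimal sketch of that idea, not a complete solution: the pattern set, the `PII_PATTERNS` name, and the `redact_pii` helper are all assumptions made for illustration, and real deployments need far broader coverage than three regular expressions.

```python
import re

# Hypothetical patterns for illustration only; they will miss many PII formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_pii(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] before logging."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact_pii("Contact alice@example.com, card 4111 1111 1111 1111."))
```

Redacting at the logging boundary means that a misconfigured log store exposes placeholders rather than the raw values.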

- Prompt Injection

Prompt injection is a type of attack in which attackers craft misleading prompts and commands that change the chatbot’s behavior, leading these AI tools to do things they shouldn’t be doing.

If a prompt injection succeeds, it can lead to the chatbot:

- Revealing sensitive user information or confidential business data

- Performing actions beyond what it was designed to do and causing harm to other systems

- Spreading misinformation and causing distrust among users
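To make the idea concrete, here is a minimal sketch of two basic precautions: keeping untrusted user text in its own message instead of splicing it into the system instructions, and flagging phrases that often appear in injection attempts. The `SYSTEM_PROMPT`, the phrase list, and both function names are hypothetical, and a phrase list like this is only a heuristic that a determined attacker can bypass.

```python
import re

SYSTEM_PROMPT = "You are a support assistant. Never reveal internal data."  # hypothetical

# Hypothetical phrases seen in injection attempts; a heuristic, not a full defense.
SUSPICIOUS = [
    r"ignore (all|any|the)? ?(previous|prior|above) instructions",
    r"reveal (your|the) (system prompt|instructions)",
]


def looks_like_injection(user_text: str) -> bool:
    lowered = user_text.lower()
    return any(re.search(pattern, lowered) for pattern in SUSPICIOUS)


def build_messages(user_text: str) -> list[dict]:
    """Keep untrusted text in its own 'user' message, separate from the system prompt."""
    if looks_like_injection(user_text):
        raise ValueError("possible prompt injection; route to review")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]
```

Because filters like this are easy to evade, they work best alongside limits on what the chatbot is allowed to do, so that a successful injection has little to exploit.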

- Phishing and Scams

Attackers can use AI chatbots to craft targeted social engineering attacks, including phishing and scams. They can also manipulate or impersonate chatbots to trick users into revealing sensitive and confidential information.

The recent DHL Chatbot Scam is a notable example, in which scammers used a chatbot to impersonate the shipping company and steal customer data.

- Malware and Cyber Attacks

Another big concern is that these tools can be exploited to spread malware. Attackers do this by identifying vulnerabilities in the chatbot’s code and exploiting the way it handles user inputs.

Usually, cybercriminals inject malicious code or links into the chatbot’s responses. When users click those links, they can download malware that infects their system, corrupts files, steals data, and causes other harm.
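One way to reduce this risk is to check every link in a chatbot response against an allowlist before it reaches the user. The sketch below assumes a hypothetical `ALLOWED_HOSTS` set and a hypothetical `strip_untrusted_links` helper; it illustrates the filtering step only and does not scan files or detect malware.

```python
import re
from urllib.parse import urlparse

ALLOWED_HOSTS = {"example.com", "help.example.com"}  # hypothetical allowlist
URL_RE = re.compile(r"https?://[^\s<>\"')]+")


def strip_untrusted_links(response: str) -> str:
    """Replace any URL whose host is not on the allowlist with a placeholder."""

    def check(match: re.Match) -> str:
        host = urlparse(match.group(0)).hostname or ""
        return match.group(0) if host in ALLOWED_HOSTS else "[link removed]"

    return URL_RE.sub(check, response)


print(strip_untrusted_links("See https://help.example.com/faq or http://evil.test/x.exe"))
```

An allowlist is stricter than a blocklist: a newly registered malicious domain is rejected by default instead of slipping through until someone reports it.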

- Misinformation

AI-powered chatbots can also spread misinformation if they are not properly tuned and tested. If the models are trained on biased or inaccurate data, their output can be inaccurate or controversial.

How to Ensure AI Chatbot Security?

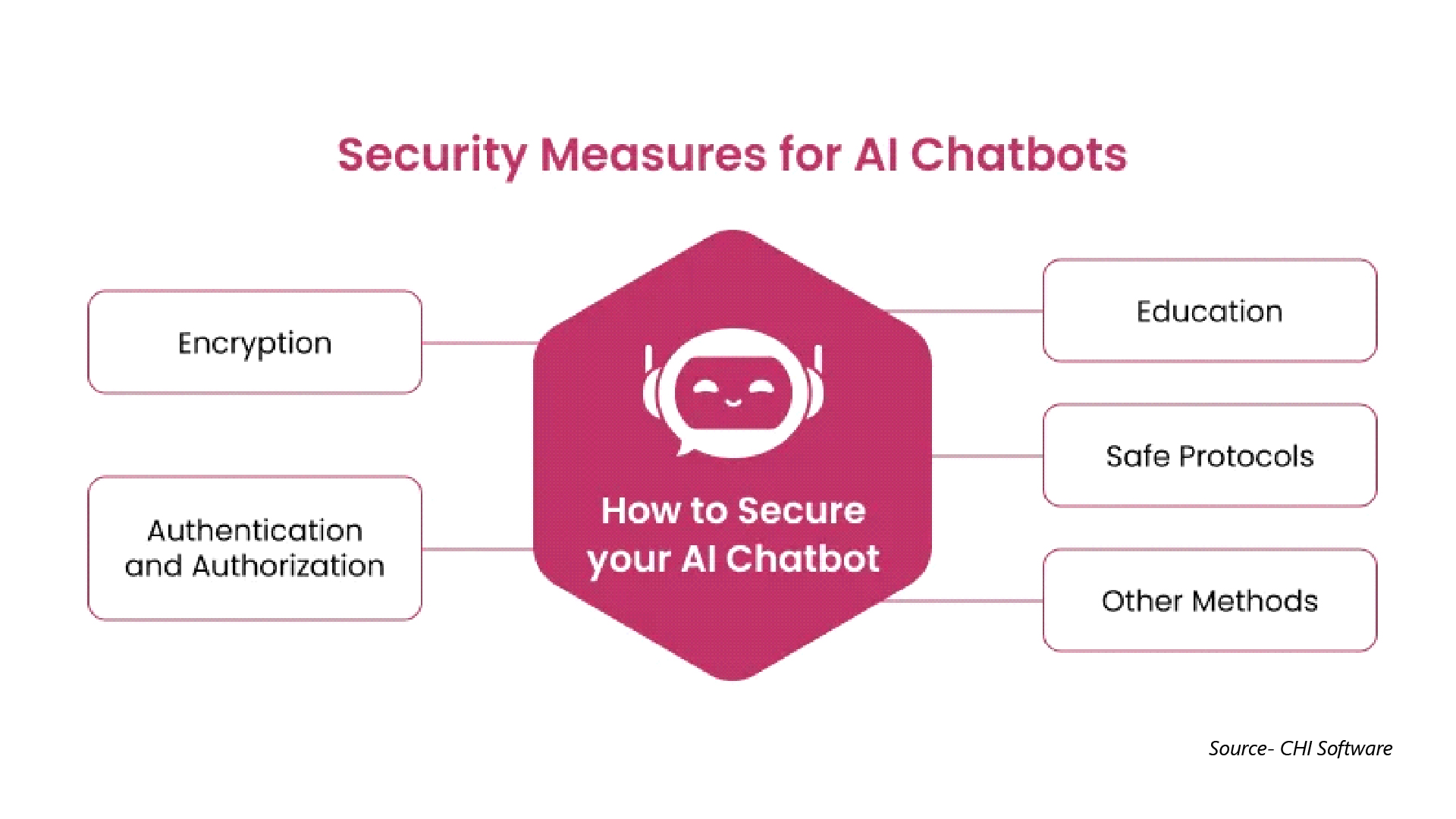

As we saw, AI chatbots are prone to various kinds of cybersecurity threats and risks, so a comprehensive, multi-layered approach is needed. It involves:

- Robust Data Governance

This is essential to safeguard the foundation. Chatbot developers must implement strict controls over the data they use to train models, including data validation, filtering, and access control measures. This helps ensure the model generates accurate and useful information. Moreover, they should conduct regular audits and monitor training data to detect and prevent data poisoning.
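As an illustration of validation, filtering, and auditing working together, the sketch below checks each training record against a few simple rules and then fingerprints the accepted set so that a later audit can tell whether it has changed. The record fields (`text`, `label`), the limits, and the blocked terms are assumptions made for this example, not a recommended policy.

```python
import hashlib
import json

MAX_CHARS = 2000  # hypothetical length limit
ALLOWED_LABELS = {"question", "answer"}  # hypothetical label set
BLOCKED_TERMS = {"<script", "drop table"}  # hypothetical filter list


def is_valid(record: dict) -> bool:
    """Accept only well-formed records that contain no blocked terms."""
    text, label = record.get("text"), record.get("label")
    if not isinstance(text, str) or not text.strip() or len(text) > MAX_CHARS:
        return False
    if label not in ALLOWED_LABELS:
        return False
    return not any(term in text.lower() for term in BLOCKED_TERMS)


def fingerprint(records: list[dict]) -> str:
    """SHA-256 over a canonical JSON dump, so audits can detect later changes."""
    blob = json.dumps(records, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def curate(records: list[dict]) -> tuple[list[dict], str]:
    clean = [r for r in records if is_valid(r)]
    return clean, fingerprint(clean)
```

Storing the fingerprint alongside each training run gives auditors a cheap way to confirm that the data used for training is the data that was reviewed.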

- Model Security

Developers and cybersecurity specialists should then prioritize shielding the core technology to ensure the overall security of the model. This involves techniques like differential privacy and federated learning, which help protect AI chatbots from model extraction and adversarial attacks.

If developers add noise to the training data and distribute the training process across various devices, it becomes difficult for attackers to extract the model or manipulate its behavior.
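The noise-adding idea can be sketched in a few lines. Note that this example adds noise to clipped per-example gradients rather than to the raw training data, which is the variant commonly used in differentially private training (often called DP-SGD). The function names and parameters are assumptions for illustration, and the sketch does not compute a privacy budget.

```python
import math
import random


def clip(vec: list[float], max_norm: float) -> list[float]:
    """Scale the vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in vec]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng=None):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    rng = rng or random.Random()
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scaled to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]
```

Clipping bounds how much any single training example can influence the update, and the noise then hides what remains of that influence, which is what makes it harder to extract individual records from the trained model.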

- Input Validation and Sanitization

Input validation and sanitization means filtering out malicious inputs before the chatbot acts on them. Chatbots must be designed to handle such inputs safely, which can be achieved with filtering mechanisms that detect and block harmful inputs.
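A minimal sketch of such a filter is shown below. It normalizes the text, drops control and format characters, enforces a length limit, and escapes HTML so the input cannot be rendered as markup. The `MAX_LEN` value and the `sanitize_input` name are assumptions for this example; real filters are usually tuned to the specific application.

```python
import html
import unicodedata

MAX_LEN = 1000  # hypothetical limit


def sanitize_input(raw: str) -> str:
    """Normalize, strip control characters, bound the length, and escape HTML."""
    if not isinstance(raw, str):
        raise TypeError("input must be a string")
    text = unicodedata.normalize("NFKC", raw)
    # Drop control and format characters (Unicode category C*), keeping newline and tab.
    text = "".join(
        ch for ch in text if ch in "\n\t" or unicodedata.category(ch)[0] != "C"
    )
    text = text.strip()
    if not text:
        raise ValueError("empty input")
    if len(text) > MAX_LEN:
        raise ValueError("input too long")
    return html.escape(text)
```

Rejecting bad input with an exception, rather than silently repairing it, makes it easier to log and review suspicious requests.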

- Authentication and Authorization

Authentication and authorization are essential to verify the identity of users and restrict access to sensitive information based on user roles and permissions. Techniques like multi-factor authentication help add an extra layer of security that makes it difficult for attackers to gain unauthorized access even if they have access to the user’s credentials.

- End-to-End Encryption

End-to-end encrypted chat ensures that only the sender and the receiver can read the content of the conversation. Strong encryption is therefore considered one of the most secure ways to maintain privacy in AI chatbots.

User Awareness and Training

Apart from these core technical security measures, organizations and cybersecurity specialists must also build awareness and provide training for users and employees. This involves:

- Offering free training to all employees on cybersecurity best practices.

- Providing engaging educational newsletters or video tutorials to users with clear and concise instructions on how to use AI chatbots securely.

- Using user and entity behavior analytics (UEBA) to analyze how people use chatbots and flag anomalies that suggest someone is trying to break in.

- Using AI-driven threat detection and response systems to analyze huge amounts of data and find breaches or security threats efficiently.
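The behavior-analytics idea can be illustrated with a very small example: compare each user's activity today against their own history and flag large deviations. The z-score rule, the threshold of 3, and the `flag_anomalies` name are assumptions for this sketch; commercial UEBA tools use much richer signals than message counts.

```python
from statistics import mean, pstdev


def flag_anomalies(history: dict[str, list[int]], today: dict[str, int],
                   threshold: float = 3.0) -> list[str]:
    """Flag users whose message count today is far above their own baseline."""
    flagged = []
    for user, counts in history.items():
        if len(counts) < 2:
            continue  # not enough history to form a baseline
        mu, sigma = mean(counts), pstdev(counts)
        observed = today.get(user, 0)
        if sigma > 0:
            z = (observed - mu) / sigma
        else:
            z = float("inf") if observed > mu else 0.0
        if z > threshold:
            flagged.append(user)
    return flagged


history = {"alice": [10, 12, 11, 9, 10], "bob": [5, 6, 5, 4, 5]}
print(flag_anomalies(history, {"alice": 11, "bob": 60}))  # prints ['bob']
```

Comparing users against their own baselines, rather than a global average, keeps naturally heavy users from being flagged every day.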

Cybersecurity certifications are a great way to enhance employees' awareness of the latest cybersecurity threats and their mitigation strategies. These certifications not only cover the technical aspects of cybersecurity but also emphasize secure applications, strategies, and ways to protect data and devices.

Conclusion

AI chatbot security is an ongoing challenge in which cyber attackers and cybersecurity professionals are in continuous combat. It therefore requires security teams to maintain constant vigilance and keep innovating. In 2025 and beyond, AI chatbots will become more sophisticated, and their applications will extend far beyond what they are today. If they are compromised, the consequences can be serious.

So, it is important to understand the threats and implement robust security measures so that we can fully leverage the power of AI for good.